Pexote Jack

A 100% AI Film Project

When deciding on a project for my CG Pro class, I wanted to make something entirely with AI, without viewers being able to tell it was made with AI.

Workflow:

Backgrounds - mostly FLUX Dev in Comfy w/ lots of outpainting

Character animations - first frames w/ FLUX Kontext, composited onto chroma key backgrounds, then animated using JSON prompts w/ Google Veo 3, w/ lots of still captures used as the next I2V frame to continue the animation

Vertical screen animations - All Midjourney, w/ same still-capture process for the ones over 20 seconds.

Voice & sound effects - All Eleven Labs, lip-synced to Veo's outputs.

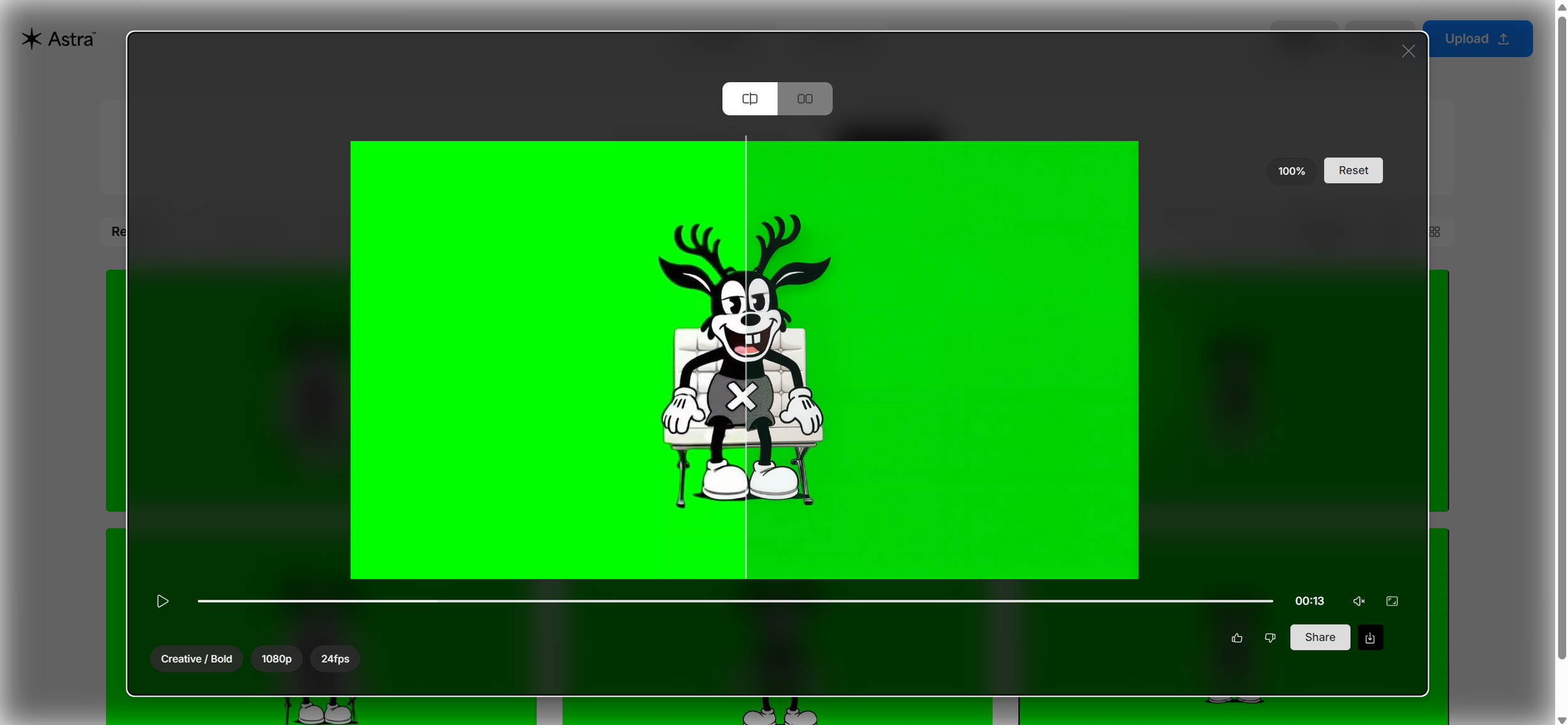

Upscaling - Topaz Astra

Compositing - DaVinci Resolve & Fusion

The Making of Pexote Jack

Episode Synopsis:

A 1930s cartoon character shows up for a meeting thinking it's his big comeback, but the exec reveals they've already created an optimized AI version of him using his past films.

Project Setup

To get started, I set up a Project in several LLMs to help manage prompting and technical questions. This turned out to be incredibly important, as prompting grew more complex over the course of the project.

I started off with a Project in Claude, which was super helpful for keeping the instructions and the whole project in one place.

As time went on, I began migrating to ChatGPT for prompts, etc. The fact that ChatGPT can access the internet in real time is SUPER helpful in AI, where things change constantly. For example, I instructed ChatGPT to look up the prompting guidelines for different models, like FLUX Kontext, and to follow those guidelines when writing prompts for me, which worked really well.

Screenplay:

I've experimented w/ LLMs and screenwriting before, but having Projects set up in several LLMs gave me the power I had been lacking in this department. That said, I started out as a writer, so I'm less inclined to lean on AI for screenwriting in general.

However, one of the really fun experiments with this episode was this - I gave each LLM a different persona:

Claude: Charlie Kaufman (existential)

ChatGPT: Joel & Ethan Coen (dark humor + intricate plot)

Super Grok: Dan Harmon (unhinged w/ pop culture references)

Then I instructed them to rewrite my original script using UCB's sketch comedy principles and give me back the rewritten draft. Next, I took each draft and had the different LLMs give "notes" / critiques on each other's drafts. I repeated that process a few times, then made my own notes and rewrote the whole thing from scratch.

Overall, I'd say it was pretty effective: maybe 3 or 4 lines of dialogue came out of the process, which is a lot for a 5-minute sketch comedy (and as a screenwriter originally, I know dialogue is something writers are generally super precious about).

ComfyUI:

I have nothing but good things to say about Comfy. It's incredibly powerful, yet simple once you understand it. I recorded myself working on this project each day (though I occasionally forgot), so I have HOURS of footage of me making Pexote Jack, the majority of it in Comfy. Once I'm finally finished with the final cut, I want to create a "Making of" video where I can explain some things in more detail.

Put simply, there's far too much from Comfy to even begin to share here, so more on that soon. In the meantime, here's a video of me sharing a specific FLUX workflow with the class, combining different LoRAs with a Canny Edge ControlNet for character design development.

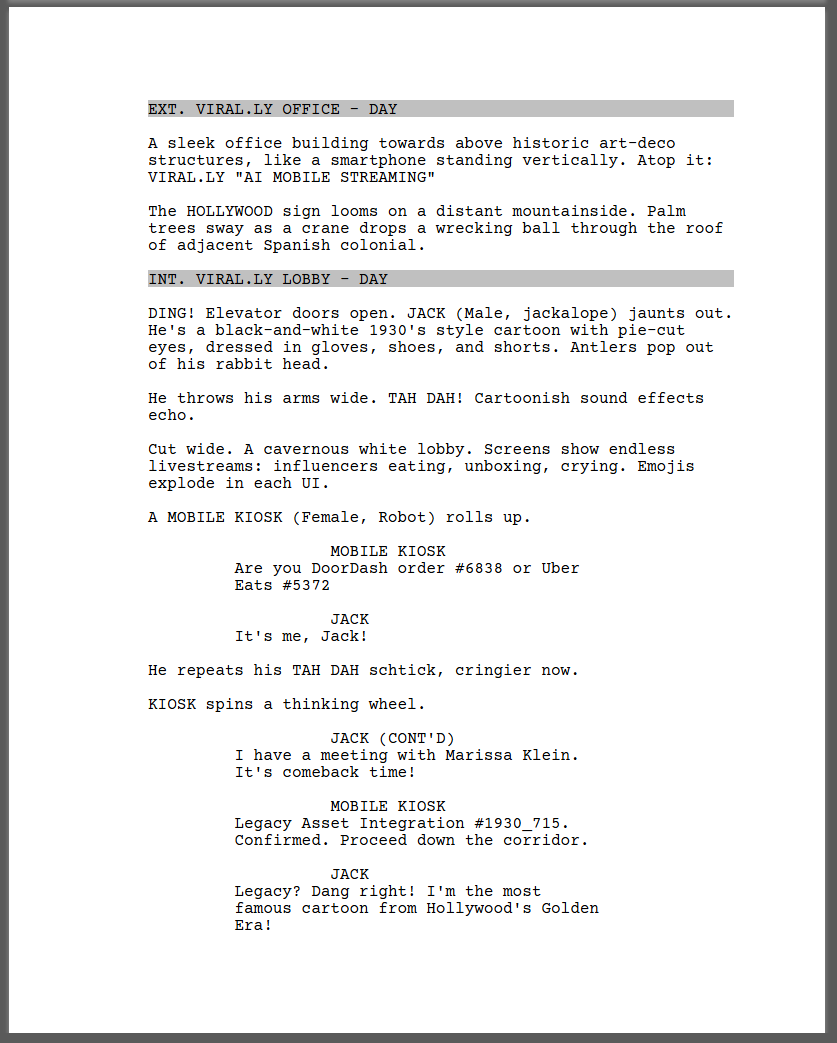

Character Design:

I had already created the titular character in 3D for a short I made in Unreal Engine (you can see it on my Director page), and that version was made with AI as well. I started with a sketch I made in Procreate, turned it into a 3D model with Kaedim, then rigged it, and finished off the black and white design in Unreal Engine.

But he was always meant to be 2D, cel style, and now with AI I felt I could make that happen. Using the 3D model as a starting point and trying hundreds of different iterations, I finally landed on the hero design shown here.

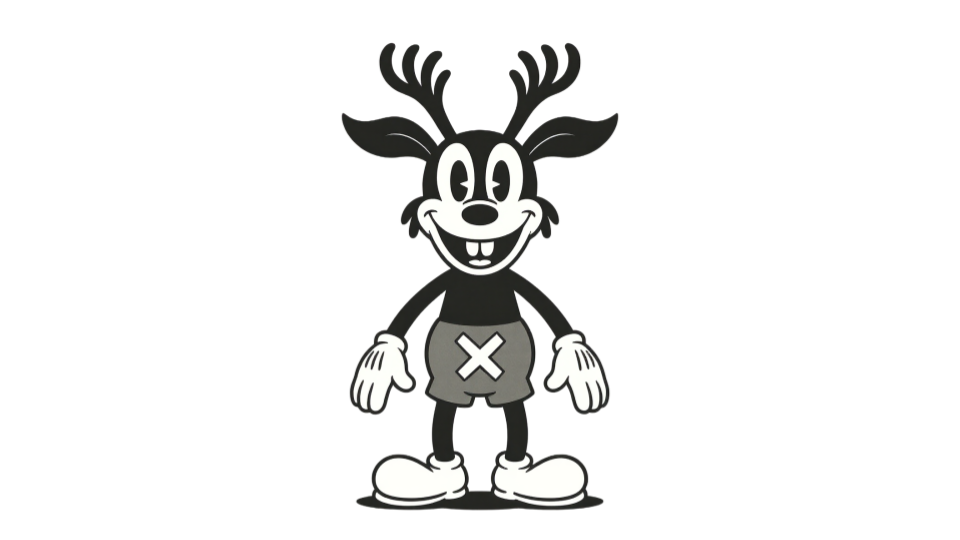

And once I had the hero design, I was off to the races using FLUX Kontext for character sheet creation.

You can see the full character design exploration in this slide deck I put together.

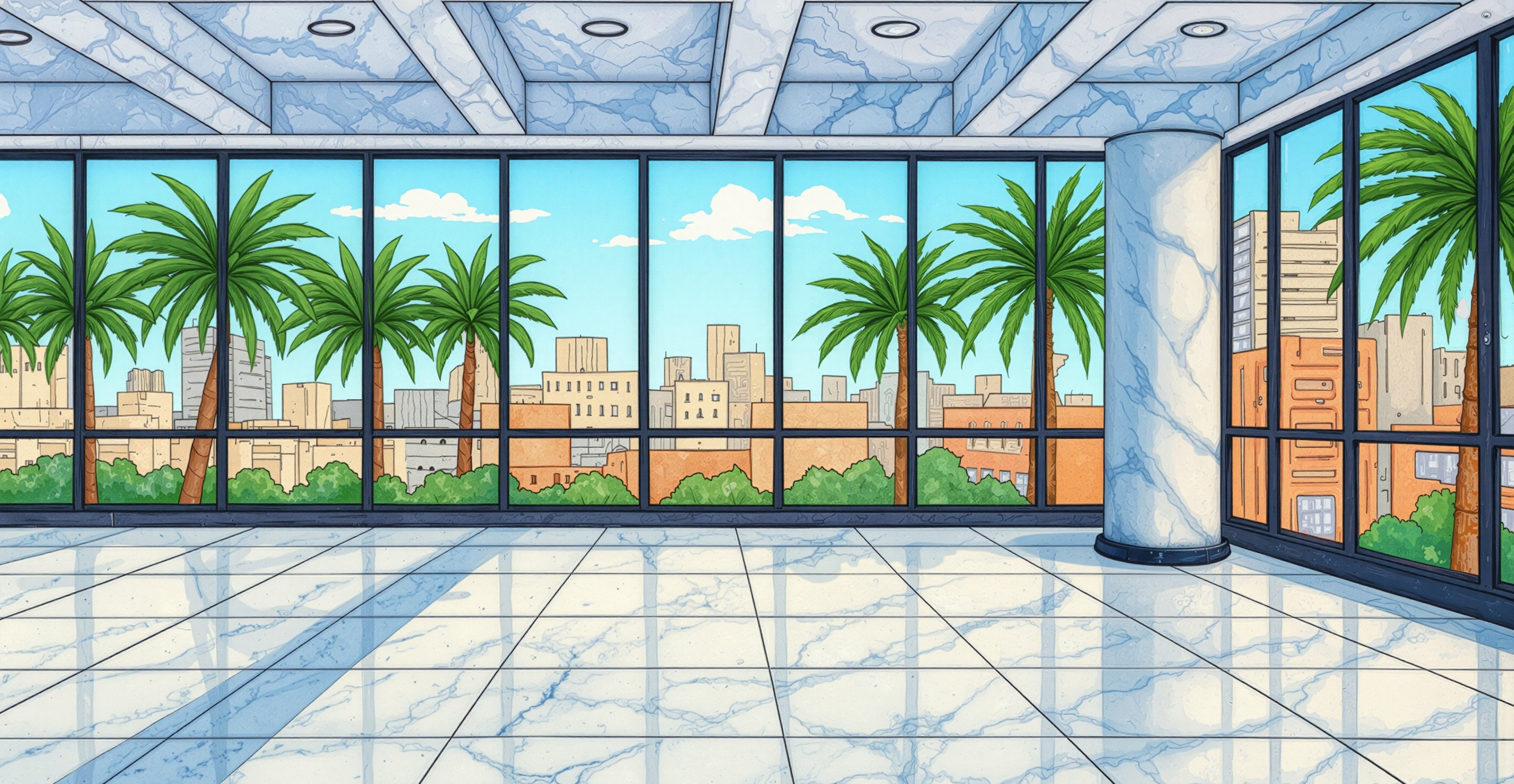

Backgrounds:

To develop a 360-degree view of the main office setting for shot / reverse shot use in the film, the workflow I eventually landed on was text-to-image prompting in Midjourney for photorealistic imagery that matched the location. I then used those images as ControlNet inputs to generate 2D cel-style animation backgrounds.

That, plus something like 2K iterations and a LOT of FLUX outpainting.

Image 2 Video & Google Veo 3 JSON Prompting

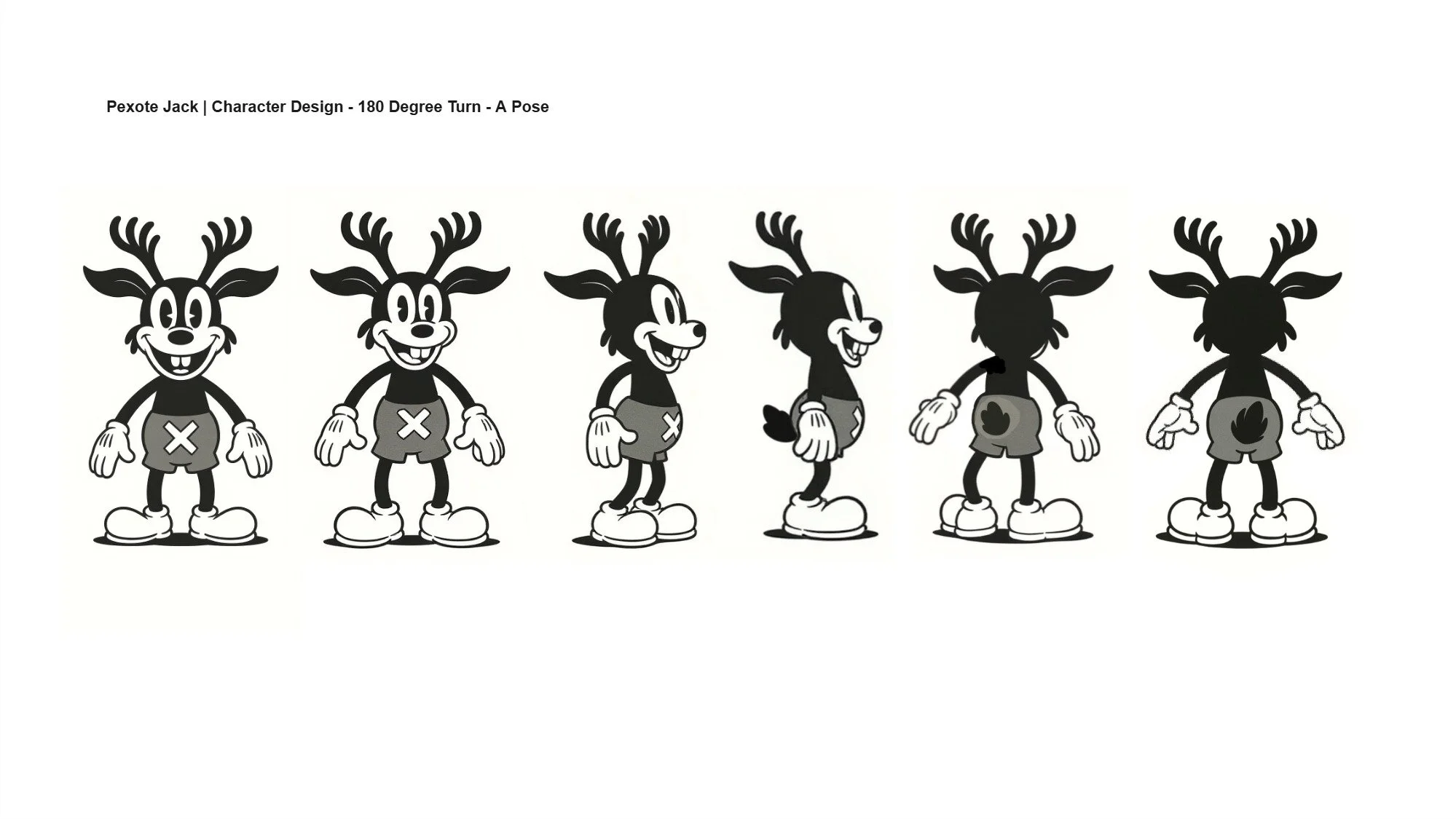

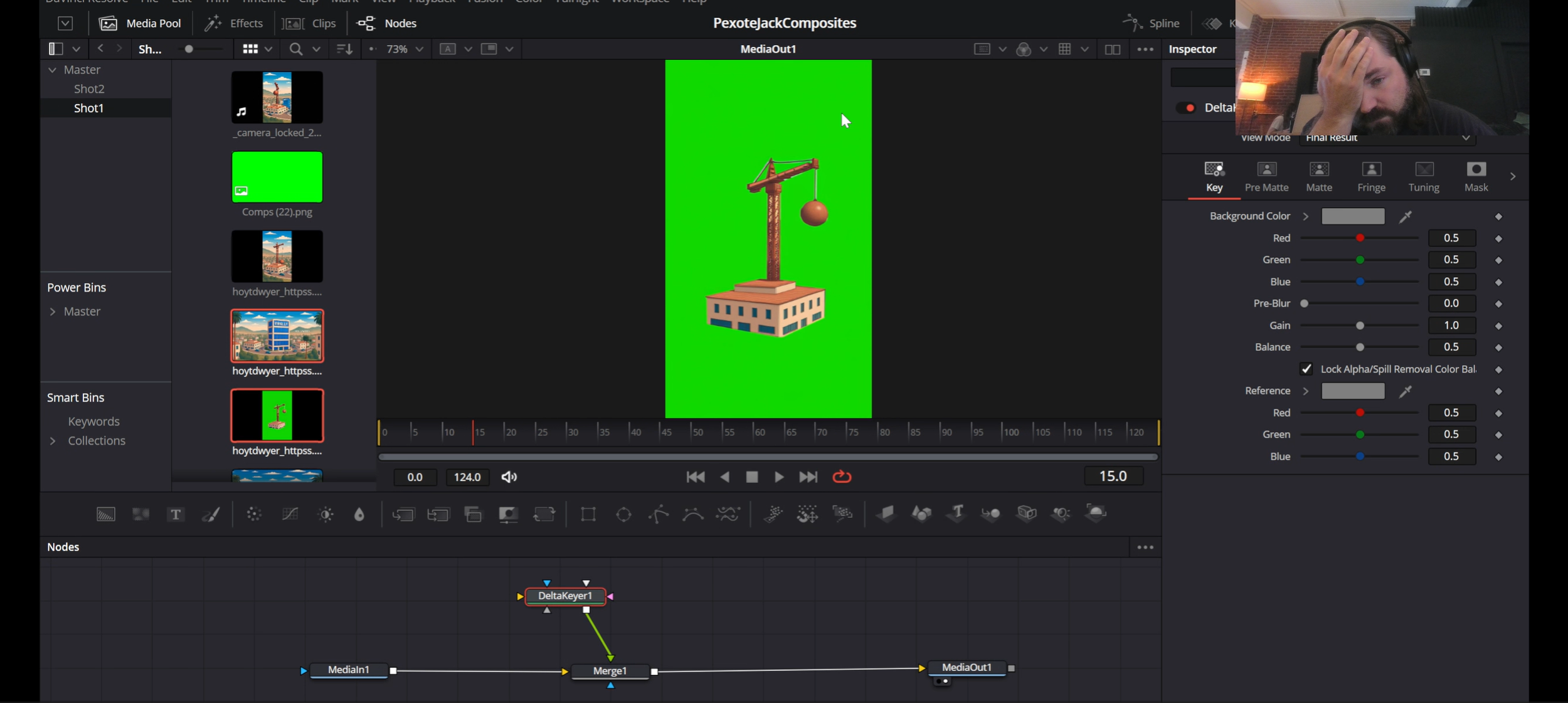

Once I got into a good Image 2 Video workflow, I'd start with the character on a chroma key background, which I've been keying out in DaVinci Resolve during compositing.
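For anyone new to keying, here is a minimal sketch of the basic idea behind separating a character from a green background. For each pixel, it measures how strongly green dominates red and blue and turns that into an alpha value, with a soft ramp between two thresholds. This is a simplified illustration only and not how DaVinci Resolve's keyers actually work. The function names and the `low`/`high` threshold values are hypothetical.

```python
from typing import Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def green_screen_alpha(pixel: Pixel, low: float = 20.0, high: float = 80.0) -> float:
    """Return alpha in [0, 1] for one pixel: 0 = background, 1 = foreground.

    Hypothetical "green dominance" key: the more green exceeds the larger of
    red and blue, the more transparent the pixel becomes.
    """
    r, g, b = pixel
    excess = g - max(r, b)
    if excess <= low:
        return 1.0  # not green enough: keep the pixel fully opaque
    if excess >= high:
        return 0.0  # strongly green: key it out completely
    # Linear ramp between the thresholds gives soft edges instead of a hard cut
    return 1.0 - (excess - low) / (high - low)


def composite(fg: Pixel, bg: Pixel) -> Pixel:
    """Blend a keyed foreground pixel over a new background pixel."""
    a = green_screen_alpha(fg)
    r, g, b = (round(a * f + (1.0 - a) * k) for f, k in zip(fg, bg))
    return (r, g, b)
```

For example, `composite((0, 255, 0), (30, 30, 30))` returns `(30, 30, 30)` because pure green is fully keyed out. A production keyer would also handle green spill on the character's edges, which this sketch ignores.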

After trying Midjourney, Runway, and Google Veo, I found Veo to be the best fit for this project. This was mostly due to the power of JSON prompts, which really seem to help Veo pin down exactly what you want each character to do.

I'm not sure why JSON prompting is kinda getting scoffed at. Maybe it's because it's reminiscent of the "prompt engineer" fad from a few years ago, but I found JSON prompting to be extremely powerful w/ Google Veo 3. Notably, Midjourney currently can't digest prompts this long and specific, which really limits what that platform can do.
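The actual prompts aren't shown here, so the following is a hypothetical sketch of what a structured JSON prompt for a single shot could look like, assembled in Python. The field names (`style`, `shot`, `character`, `constraints`) and all values are illustrative assumptions, not a schema that Veo 3 requires. The model simply receives the serialized JSON as ordinary prompt text.

```python
import json


def build_shot_prompt(character: str, action: str, dialogue: str,
                      camera: str, background: str) -> str:
    """Assemble a structured prompt for one I2V shot (hypothetical field names)."""
    prompt = {
        "style": "1930s-style 2D cel animation",
        "shot": {"camera": camera, "background": background},
        "character": {
            "name": character,
            "action": action,
            "dialogue": dialogue,
        },
        # Explicit constraints are one reason structured prompts can be more specific
        "constraints": [
            "keep the character design identical to the first frame",
            "no camera movement unless specified",
        ],
    }
    # indent=2 keeps the prompt readable when pasted into a prompt box
    return json.dumps(prompt, indent=2)


if __name__ == "__main__":
    print(build_shot_prompt(
        character="Pexote Jack",
        action="leans forward and taps the desk twice",
        dialogue="(placeholder line)",
        camera="static medium shot",
        background="solid chroma key green",
    ))
```

Splitting the shot into named fields like this makes it easy to change one element, such as the action, between generations while keeping everything else fixed.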

Upscaling:

I'm just now getting into upscaling w/ Topaz Labs. It does a pretty good job on Jack, except that his "pie cut" eyes got translated into different shapes in the upscaling process, and that's too specific a part of his character design for me to accept. For Marrissa's character, I'm expecting much better results, and I'm planning to do a run-through of all her final clips w/ Topaz Astra.

Once the final composite is done, I plan to experiment with upscaling the entire scene.

Dialogue & Audio:

Eleven Labs has come A LONG way. Using LLM-assisted prompting, I was able to design a custom voice for Jack that I love, and with a few small tweaks in DaVinci Resolve, I can raise the pitch to make it more cartoony, etc.

Also notable are the sound effects Eleven Labs is able to generate. Normally, sound effects are one of those things that require TONS of time: finding the right ones, making sure they're royalty free, etc. So being able to just generate them is a huge, huge, huge time saver.

Using the audio generated by Google Veo was also super helpful for lip-syncing the animations, which is another huge time saver in post.

Compositing and Post:

Since I decided to use a chroma key approach, there's obviously a lot of compositing involved. I'm really comfortable in DaVinci Resolve, so I did almost everything in Fusion.